The Idea That Revolutionized Online Video Streaming

The year was 1998. U.S. President Bill Clinton was one year into his second term when the scandal that would overshadow his presidency broke. He was set to stand before a grand jury and testify, and the nation was set to watch, some on their huge cathode-ray tube computer monitors with a little help from the World Wide Web.

A few months later, on February 5th, 1999, the "Victoria's Secret Fashion Show" took the same approach. They decided this would be the first year they would broadcast the show over the web. It accrued 1.5 million viewers. The rush to online video began. Webcasts at events exploded in popularity. Consumers were hungry for internet video. There was just one problem. Streaming video in the late 1990s was an excruciating experience.

Streaming media expert Dan Rayburn says, "Think about trying to watch a video where you're getting maybe one frame every two or three seconds, and it's the size of a postage stamp. No joke. I mean, that's how small the window was, and it didn't work well; too many people tuned in, and there wasn't enough infrastructure capacity at the time."

Streaming couldn't be a mainstream tool until these major quality hurdles could be overcome. And that wouldn't be possible until the groundbreaking introduction of the CDN.

The unexpected influence of lesser-known inventions and the creative moments that made them possible.

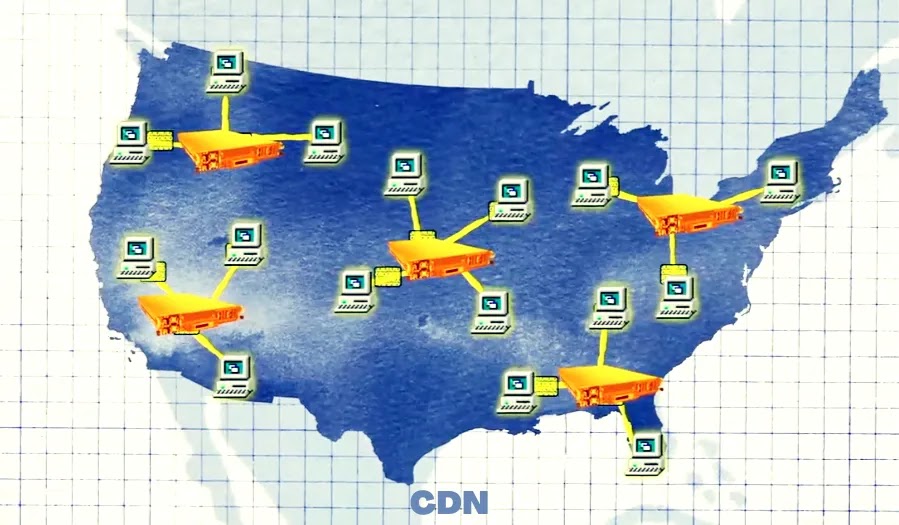

Though we like to think of the internet as a nebulous cloud floating above, it's much more physical than we give it credit for. And in the early days, the physical limits of the internet were inescapable. If too many people visited a website simultaneously, congestion would overwhelm the servers. Then, there was the issue of geography. The internet is a network of networks. If you're trying to retrieve information, like a webpage, from servers located far away from you, your computer has to send a request that travels through different links of the interconnected chain to retrieve that webpage.

Once that request is received, the server can approve the request and start sending individual packets back through the chain. These packets must travel the same long distance from the server back to your computer. Though it's not always the defining factor, the farther away a server is, the longer it takes to retrieve the packets that make up a webpage. For a consumer, it means longer loading times. This works okay for static web pages, but it would be a problem for any large file. And video required some heavy lifting.

In 1995, streaming video was a dream at best. The internet just wasn't built for it.

Dan Rayburn adds, "Back then, object sizes on the internet were very, very, very, very small. There was no video. There were no software downloads. There were no 'Call of Duty' patches that were gigantic. It was all JPEGs; it was GIFs. Maybe it was JavaScript, or it was some HTML code. It was very, very small objects."

Video, by comparison, is massive. A short video clip could be 1,000 times the size of a photo or more. Because of this, streaming video struggled to become mainstream in the '90s. At the time, the best way to transfer video was to host the file on a server and have the recipients spend a substantial amount of time downloading it.

Dan Rayburn mentions, "When it came to downloading videos, many times that was done simply because of the connection you were on, you wouldn't be able to get the video streaming with enough what we call FPS or frames per second to where it would be smooth. It just made more sense for you to download the video and wait till it was done. Then you could play it on your computer locally without any stuttering or technical issues."

It worked, but it was a far cry from high-quality streaming video. For streaming quality to improve, videos needed to start up faster and be more reliable. And computer engineers were up to the challenge. They created a solution called a content delivery network, or CDN for short, revolutionizing the internet. Sandpiper, InterVU, iBEAM, and many other small companies were in the first wave of folks to experiment with the idea of a CDN.

MIT applied mathematics professor and algorithmic expert Dr. Tom Leighton was sitting down the hall from Tim Berners-Lee, the man many call the father of the World Wide Web. Berners-Lee posed a problem to the wider MIT community. Because of how the web was built, congestion was bound to become an issue that held people back from speedy web browsing. Dr. Leighton felt he could solve this congestion with math using algorithms to duplicate and route content intelligently. He and a few colleagues got to thinking.

If geographical distance was the problem, get rid of the distance. Replicate the content and bring that content closer to people. This lessens the number of hops that computers would have to make to retrieve information and reduces the likelihood that any server would get overwhelmed.
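The core idea of replicating content and sending each viewer to a nearby copy can be sketched in a few lines. This is a minimal illustration, not how Akamai or any real CDN routes traffic: the server names and coordinates are hypothetical, and real networks also weigh congestion and server load, since distance is not always the defining factor.

```python
import math

# Hypothetical edge locations (name -> latitude, longitude in degrees).
EDGE_SERVERS = {
    "new-york": (40.71, -74.01),
    "london": (51.51, -0.13),
    "tokyo": (35.68, 139.69),
}


def great_circle_km(a, b):
    """Approximate distance between two (lat, lon) points via the haversine formula."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius


def nearest_edge(viewer, servers=EDGE_SERVERS):
    """Return the name of the edge server geographically closest to the viewer."""
    return min(servers, key=lambda name: great_circle_km(viewer, servers[name]))
```

With these assumed locations, a viewer in Paris, `nearest_edge((48.86, 2.35))`, is sent to `"london"` rather than across the Atlantic.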

Leighton called his company Akamai, and it's still one of the largest CDN companies in the world today. CDNs are only one piece of the puzzle, however. They help bring the content closer to you, allowing it to load faster, but making that content look good is a different challenge.

The secret ingredients for getting high-resolution streaming video are bitrate and compression.

Bitrate is a measure of how much data is transferred per unit of time, usually expressed in bits per second. Higher-bitrate video is generally higher quality, but streaming it takes more bandwidth. In video, bitrate is everything.
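The arithmetic behind bitrate is simple enough to sketch. The function names below are made up for illustration, and the numbers in the usage note are examples rather than measurements.

```python
def stream_size_megabytes(bitrate_kbps, duration_seconds):
    """Size of a stream: bitrate (kilobits/s) times duration, converted to megabytes."""
    total_kilobits = bitrate_kbps * duration_seconds
    return total_kilobits / 8 / 1000  # 8 bits per byte, 1000 kilobytes per megabyte


def can_play_in_real_time(bitrate_kbps, bandwidth_kbps):
    """A stream keeps up only if bits arrive at least as fast as playback consumes them."""
    return bandwidth_kbps >= bitrate_kbps
```

For example, one minute of video at 300 kilobits per second comes to `stream_size_megabytes(300, 60)`, which is 2.25 MB, and `can_play_in_real_time(300, 56)` is `False`: a 56 kbps dial-up modem cannot deliver that stream as fast as it plays.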

Dan Rayburn claims, "When we were on dial-up, there was a limit to the number of bits you could push through the wire to the consumer. It was a physical restraint."

In the early days, bitrate was very low, mostly due to physical constraints. But even with those limits, internet video exploded in the early 2000s, and no platform took off faster than YouTube.

Founded in 2005, YouTube democratized video-making. Its platform was reliable for the time but wasn't without its faults. If you were on YouTube in those early years, you probably remember watching a video that kept stalling to buffer.

Buffering was the absolute worst part of YouTube or any other streaming video platform in those early days.

Dan Rayburn says, "Many times in the early days when you clicked play, you would have to wait for five, six, seven seconds for it to start up, and what was happening was you had to wait for enough bits to get from wherever they were to your location. And then many times we had rebuffering, which was, okay, you watch 10 seconds, and oh, it froze. You had to wait for the packets to come back to you."

There was a simple reason this was happening.

Dan Rayburn again adds, "If you were encoding a video, let's say, for 300 kilobits per second, that's the only bitrate you were encoding it for. So if at any time, while you were watching that video, your bandwidth dropped below 300 kilobits per second, the video would stop, and it would rebuffer."

And with that realization came a simple but game-changing solution.

Dan Rayburn says, "When adaptive bitrate came around, which we call variable bitrate, content owners started encoding the video in 300K, 500K, and 1 Meg. So the point was if you're watching it at 500K and your bandwidth degraded, instead of that video stopping at 500K, it would reduce the bit rate to 300K, but keep playing."

Or, in simpler terms, when you're watching a YouTube video in high definition and your connection weakens, the player will automatically drop you down to a lower resolution to ensure that the video keeps playing.
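The switching logic can be sketched as follows, using the 300K, 500K, and 1 Meg renditions from the quote above. This is a simplified illustration: the function names are hypothetical, and real players also smooth their bandwidth estimates and watch how much video is already buffered.

```python
# Renditions from the quote above, in kilobits per second.
LADDER_KBPS = [300, 500, 1000]


def pick_rendition(measured_bandwidth_kbps, ladder=LADDER_KBPS):
    """Pick the highest bitrate that fits the measured bandwidth.

    Falls back to the lowest rendition when even that does not fit,
    so playback is attempted rather than abandoned.
    """
    fitting = [rate for rate in sorted(ladder) if rate <= measured_bandwidth_kbps]
    return fitting[-1] if fitting else min(ladder)


def simulate(bandwidth_samples_kbps):
    """Choose a rendition for each segment, given one bandwidth sample per segment."""
    return [pick_rendition(sample) for sample in bandwidth_samples_kbps]
```

With bandwidth samples of `[1200, 600, 350, 200, 800]`, `simulate` returns `[1000, 500, 300, 300, 500]`: the stream steps down as the connection degrades and climbs back when it recovers, instead of stopping.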

Dan Rayburn states, "Adaptive bitrate improved the customer experience, because what happened when we, as an industry, could get rid of the rebuffering? Consumers watched more content. So adaptive bitrate, man, that might have to be on top of my list of the best technology advances for the industry."

But having a solid bitrate is only one part of the two-part secret sauce for improving the visual quality of internet video. The other part is compression and decompression, referred to as codecs.

Dan Rayburn continues, "Here's the example I like to use for people who aren't technology savvy. It's like frozen concentrated orange juice, okay? This sounds a little odd, but bear with me. So you get frozen concentrated orange juice, right? And it's very concentrated. But then, when you put it in water, it expands and has a larger volume. And video's kind of the same way. It starts where you have to compress it. And then once you get it to the device that you're playing it back on, it decompresses it, and it's better quality."

There are two main ways to do compression: lossy and lossless. Lossy compression reduces the file size by discarding some information, which costs some quality. Lossless compression reduces the file size without losing any quality, but it cannot shrink video nearly as far. Streaming video relies on lossy codecs tuned so that the loss is hard to notice, which makes for a better user experience. Either way, the encoder looks for redundancies within and between frames to determine where it can remove information.

So, for example, instead of redrawing every pixel for each frame, good compression allows the pixels in the background that aren't changing much to be remembered and reused for the following frames. Only the portions of the frame where lots of movement occurs are redrawn with each frame. This results in a high-quality video at a smaller file size. For internet video to be as efficient and high-quality as possible, all of these technologies must work together.
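The reuse of unchanged pixels can be illustrated with a toy example. This is only a sketch of the principle, with frames modeled as flat lists of pixel values and hypothetical function names; real codecs work on blocks of pixels, predict motion, and tolerate small differences rather than requiring exact matches.

```python
def encode_delta(previous_frame, current_frame):
    """Record only the pixels that changed since the previous frame, as {index: new_value}."""
    return {
        i: new
        for i, (old, new) in enumerate(zip(previous_frame, current_frame))
        if old != new
    }


def apply_delta(previous_frame, delta):
    """Rebuild the current frame by reusing the previous frame and patching changed pixels."""
    frame = list(previous_frame)
    for i, value in delta.items():
        frame[i] = value
    return frame
```

If a bright pixel moves one position across a static eight-pixel background, the delta holds two entries instead of eight values, and `apply_delta` reconstructs the new frame from the old one.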

Here's how they do it. Let's say Netflix is preparing to release the newest season of "Stranger Things." After it's edited and ready to be uploaded to the Netflix server, it has to be compressed. A team at Netflix works frame-by-frame to figure out the best compression method.

Dan Rayburn says, "They have an entire team over there that looks at how do we encode video frame-by-frame, whether it's based on lighting, whether it's based on colors, all these different variables."

They compress that file into the smallest form it can be while retaining the highest quality it can. They also encode it at lower bitrates, aka lesser quality, to give a few possible options in case your internet connection doesn't allow for the highest resolution, giving consumers the flexibility to take advantage of adaptive bitrate. These files are encoded into different specialized formats for every device the content could be played on.

Dan Rayburn conveys, "It's not uncommon for many companies to have between 8 to 12 different encoding profiles inside the ladder to constantly try to get the best quality you can."

All of these files are then uploaded to Netflix's network. Seeing as "Stranger Things" is one of the most watched shows on the platform, they expect people to watch it as soon as it launches. In preparation for this, they ensured that a copy of the files for each episode was replicated across all the CDN servers they have strategically located across the globe. These servers act as a local hub where the file will be stored whenever a nearby consumer wants to retrieve it.

The content is saved on multiple edge servers so that when a multitude of people try to access it, each individual can access the server that's geographically closest to them, resulting in the least amount of lag between when you click the play button and when the content starts playing. All this happens in advance so that it loads virtually without delay when you click that video.

Of course, Netflix and other streaming services don't do this for every show. They strategically replicate the content they expect most people are going to watch. If you pick a show, however, that hasn't been saved on the server closest to you yet, it might take a few seconds longer to load that first frame because your device might have to request that content from a server that's much further away, potentially to the main origin servers in LA.

It isn't a significant amount of time. You'd probably barely notice. But thanks to you, that piece of content is now saved on a server nearby. If any other local consumer decides to watch the same movie or show, it will load virtually without delay. These technologies come together to deliver an experience that works almost like magic.
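The behavior described above, where popular titles are copied in advance and everything else is fetched on first request and then kept nearby, can be sketched as a small cache. The class and method names are hypothetical, and a real edge server would also have limited storage and rules for evicting old content.

```python
class EdgeCache:
    """Toy edge server: serve from local storage, fetch from origin on a miss."""

    def __init__(self, fetch_from_origin):
        self._fetch_from_origin = fetch_from_origin  # callable: title -> content
        self._store = {}

    def prefill(self, title, content):
        """Replicate content ahead of time, as is done for expected hits."""
        self._store[title] = content

    def get(self, title):
        """Return (content, was_cache_hit)."""
        if title in self._store:
            return self._store[title], True
        content = self._fetch_from_origin(title)  # slower, long-distance trip
        self._store[title] = content              # keep a copy for the next nearby viewer
        return content, False
```

The first viewer to request a title that was not prefilled gets a miss and pays for the trip to the origin; every later request for that title on the same edge is a hit.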

Dan Rayburn questions, "When was the last time you complained about the video quality on Netflix? Probably never, right, or not in a long time."

Streaming video on the internet is objectively amazing. It seems like it got good overnight, but it's taken 25 years of experimentation, collaboration, and lots of learning to get it to its current point. So, how does streaming change in the next 25 years?

The industry is expected to keep pushing technology forward in different ways. The biggest driver of that growth may be the mobile industry. In the past few years, internet video has seen explosive growth in the mobile sector, thanks to apps like Instagram, TikTok, YouTube, and more. This continual shift means more of a focus on how to make mobile video content better.

One way this could manifest itself is better compression for higher quality videos at smaller file sizes. This would keep bitrates low while increasing resolution over a cellular connection. But in terms of watching content on your home TV, the next upcoming technological shift you may notice is content that loads even faster.

Dan Rayburn says, "Today, as an industry, we're trying to improve on the startup time or what we call time to the first frame. How long does it take to see that first frame of the video?"

Samsung and other TV manufacturers have released 8K TVs in recent years, doubling down on the idea that increasing the resolution will be a defining factor for the next wave of streaming technology. But not everyone feels that way.

Dan Rayburn says, "8K I think personally makes no sense in the market in any way, shape, or form. The problem with 8K is that it doesn't add any benefit. The type of content we're watching and the devices we're watching it on adds no benefit. People don't want to replace their TVs with all their other hardware. There has to be good business logic behind this as a consumer; there has to be something that's driving it. I think where the industry is right now, as far as 4K being the max purely from a resolution standpoint, I don't think it goes past there. I don't think it goes past 4K from an adoption standpoint because there won't be a benefit."

The idea that we might be hitting the pinnacle of video resolution is a testament to how far the technology has come from its choppy, postage-stamp-size inception. So, the biggest strides in the next 25 years of streaming might be making the rest of the experience as frictionless as possible.

Dan Rayburn says, "It's not always about, let's push out better quality. It's what defines a user experience. We're also seeing technologies that provide immediate benefits, like voice control. And voice control is not something you hear a ton about. I think many consumers take that for granted. However, it was interesting to have Hulu and Amazon on my stage at one time, talking about consumers who were consuming three to four times more Hulu content than those who weren't using voice control. And that's an interesting point. Why is that happening? Well, simple, easy to use. Consumers want something easy. If it's easy, they'll use it more. I think the future is more in voice control than machine learning or AI."

While improving the quality and accessibility of streaming services on mobile and TV will be a priority in the coming years, we are starting to see that this is just the beginning of the streaming revolution. Industry leaders are already working on the next frontier of streaming, video games. The goal is to stream entire games as easily as you stream a show on Netflix.

Today, while most games allow for online social interaction, each requires players to download huge files, like maps, characters, props, and textures, all stored locally on their system. Cloud gaming would free you from huge downloads of this kind. Though it would rely on similar underlying technologies, substantial hurdles must be overcome.

Unlike movies, video games generally give each player a different point of view. So, not everyone is looking at the same images. Most games also allow players to make decisions that influence the visuals in somewhat unpredictable ways. Just with that, the technical complexity needed to pull off streaming a video game as opposed to a movie is exponentially greater.

On top of that, gaming requires a super-fast connection that minimizes any perceptible delay between when a player clicks a button and when that corresponding action happens on screen. Companies like Sony and Nvidia have been working on cloud gaming for years.

Google and Microsoft have recently entered the arena with Stadia and xCloud. With their inroads into internet infrastructure, there is renewed hope that an industry-wide revolution could be on its way. But this is just the start. If we can build a robust cloud gaming infrastructure, it could be a stepping stone in taking VR and AR technologies to the levels we've seen only in sci-fi movies. Imagine attending a concert from home or getting a front-row seat to the Super Bowl without having to leave your couch. This would revolutionize the way that we consume sports and entertainment.

Beyond that, it could revolutionize the way we live. Instead of discussing how volcanic activity works in school, you could put on a headset and walk around a volcano in VR. Instead of going to school or work, we might just put on a VR headset and remote into our digital school or office. At a certain point, we could be in a "Ready Player One"-esque world where there's a virtual oasis waiting for us with just the tap of a VR headset.

All of that is a long, long way off. However, the technology we've built and refined to stream our favorite shows could one day be the backbone of a much greater system of immersive experiences. As we move closer to a future in which video, video games, and possibly VR will dominate the internet landscape, it's evident that the next frontier for streaming will necessitate a significant amount of new infrastructure and inventiveness.

Fortifying access to reliable broadband internet will be paramount. Making these services affordable will be another challenge. But after we overcome these challenges, streaming's full potential might be fulfilled, making it a technology that would shape our future.
